A few thingz

Joseph Basquin

15/02/2026

#ai

The Content Overflow Era – the end of the Long Tail?

What follows might seem obvious by now, but it is always good to put a name on it. I'm talking about media content in general: books, music, website articles, soon videos, and so on.

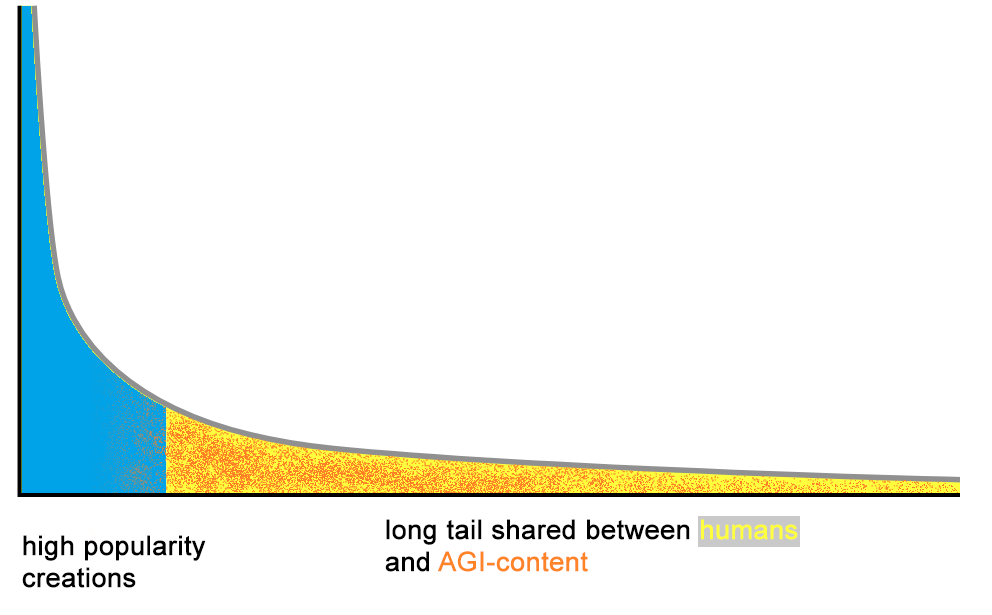

Here is what the "Long Tail" is now evolving into (see Period #2 if you're unfamiliar):

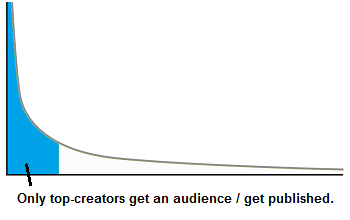

Period #1 – Pre-Internet era

Limited published content, for at least these reasons:

- fewer creators, because creating is complex (e.g. access to a recording studio is expensive in the pre-home-studio era)

- curation done by editing/publishing companies is a heavy barrier for creators

- physical limitations: storage isn't infinite in book/music shops, and worldwide access to content is complex because it requires physical shipping

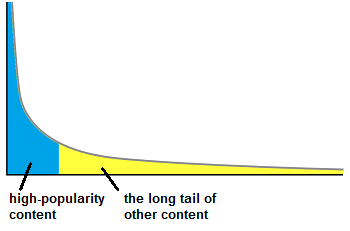

Period #2 – The Long Tail 2000-2022

The Long Tail concept was popularized by Chris Anderson (2004, 2006). Notable aspects:

- easier means of creation (e.g. affordable home studios)

- no more physical limitations: nearly infinite digital storage for online platforms, easy worldwide access without physical shipping (internet)

Consequence: during this period, it was possible for a human producing original content (which landed in the long tail) to exist as a creator and to get their content read/listened to by other humans. This also made niche products economically viable for (some) creators.

Period #3 – Content Overflow 2022-?

- unlimited creation by bots (AI-generated websites for SEO purposes, AI-generated music on Deezer)

- curation-less "automated" platforms/search engines become less relevant:

  - on tech discussion channels, everyone complains about Google's decreasing ability to find relevant results (flood of SEO content)

  - if nothing changes, Spotify might become similarly uninteresting. See also "Deezer Says 10,000+ AI Tracks Are Being Uploaded Daily".

Consequence for small creators: humans who create content but are not among the top celebrities will find it increasingly difficult to get their content read/listened to by other humans, because they will sit in the same too-long tail as AI-generated content.

Possible outcomes

- small human creators no longer able to get an audience? (can't compete with the flood of AI-generated content)

- decreasing interest in curation-less platforms (Google, Spotify, ...) and rise of human-curated platforms (Reddit + new ones)?

- or, alternatively, 90% of people don't really care and will indiscriminately consume AI-generated or human-generated content on big platforms (acceptance of lower standards), so no major change for platforms

About me: I am Joseph Basquin, maths PhD. I create products such as SamplerBox, YellowNoiseAudio, Jeux d'orgues, this blogging engine...

I do freelancing: Software product design / Python / R&D / Automation / Embedded / Audio / Data / UX / MVP. Send me an email.

Python + TensorFlow + GPU + CUDA + CUDNN setup with Ubuntu

Every time I set up Python + TensorFlow on a new machine with a fresh Ubuntu install, I have to spend time on this topic again and go through some trial and error (yes I'm speaking about such issues). So here is a little HOWTO, once and for all.

Important fact: we need to install the specific versions of CUDA and CUDNN that match a particular version of TensorFlow, otherwise it will fail with errors like libcudnn.so.7: cannot open shared object file: No such file or directory.

For example, for TensorFlow 2.3, we have to use CUDA 10.1 and CUDNN 7.6 (see here).
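The version pairing can be written down as a small lookup, which is handy to keep next to install scripts. This is only a sketch: the 2.3 row comes from this post, the other rows are recalled from TensorFlow's official "tested build configurations" table and should be checked against it, and the names `TESTED_GPU_CONFIGS` and `required_cuda_cudnn` are made up for the example.

```python
# TensorFlow release (major.minor) -> (CUDA version, cuDNN version).
# Only the "2.3" row is taken from this post; the others are recalled from
# the official tested-configurations table and should be verified there.
TESTED_GPU_CONFIGS = {
    "2.0": ("10.0", "7.4"),
    "2.1": ("10.1", "7.6"),
    "2.2": ("10.1", "7.6"),
    "2.3": ("10.1", "7.6"),
    "2.4": ("11.0", "8.0"),
}


def required_cuda_cudnn(tf_version):
    """Return (cuda, cudnn) for a TensorFlow version string, or None if unknown."""
    major_minor = ".".join(tf_version.split(".")[:2])
    return TESTED_GPU_CONFIGS.get(major_minor)


print(required_cuda_cudnn("2.3.0"))
```

An unknown release returns `None` rather than a guess, since a wrong pairing is exactly what produces the missing-library errors.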

Here is how to install on Ubuntu 18.04:

pip3 install --upgrade pip # it was mandatory to upgrade for me

pip3 install keras tensorflow==2.3.0

wget https://developer.download.nvidia.com/compute/cuda/repos/ubuntu1804/x86_64/cuda-ubuntu1804.pin

sudo mv cuda-ubuntu1804.pin /etc/apt/preferences.d/cuda-repository-pin-600

sudo apt-key adv --fetch-keys https://developer.download.nvidia.com/compute/cuda/repos/ubuntu1804/x86_64/7fa2af80.pub

sudo add-apt-repository "deb https://developer.download.nvidia.com/compute/cuda/repos/ubuntu1804/x86_64/ /"

sudo add-apt-repository ppa:graphics-drivers/ppa

sudo apt-get update

sudo apt install cuda-10-1 nvidia-driver-430

To test if the NVIDIA driver is properly installed, you can run nvidia-smi (I noticed a reboot was necessary).
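The same driver check can be scripted, for example at the start of a long job. A minimal sketch, assuming `nvidia-smi` is on the `PATH`; the function name `driver_summary` is hypothetical, and the code sticks to arguments available in the Python 3.6 that ships with Ubuntu 18.04.

```python
import shutil
import subprocess


def driver_summary(command="nvidia-smi"):
    """Return "GPU name, driver version" lines, or None if the tool is missing or fails."""
    if shutil.which(command) is None:
        return None  # driver tools not installed, or not on the PATH
    result = subprocess.run(
        [command, "--query-gpu=name,driver_version", "--format=csv,noheader"],
        stdout=subprocess.PIPE,
        stderr=subprocess.PIPE,
        universal_newlines=True,
    )
    if result.returncode != 0:
        return None  # tool present but cannot talk to the driver (reboot needed?)
    return result.stdout.strip()


print(driver_summary() or "NVIDIA driver not available")
```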

Then get "Download cuDNN v7.6.5 (November 5th, 2019), for CUDA 10.1" from https://developer.nvidia.com/rdp/cudnn-archive (you need to create an account there), and then:

sudo dpkg -i libcudnn7_7.6.5.32-1+cuda10.1_amd64.deb

That's it! Reboot the computer, launch Python 3 and do:

import tensorflow

tensorflow.test.gpu_device_name() # also, tensorflow.test.is_gpu_available() should give True

The last line should display the right GPU device name. If you get an empty string instead, it means your GPU isn't used by TensorFlow!
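For a slightly more talkative check, here is a sketch that lists the GPUs TensorFlow sees and, if there are none, tests whether the dynamic loader can open the two libraries named in this post's error messages. It uses `tf.config.list_physical_devices`, which recent TensorFlow 2.x releases recommend over the deprecated `tf.test.is_gpu_available()`. The helper names `visible_gpus` and `can_load` are made up for the example.

```python
import ctypes


def visible_gpus():
    """Return the GPU device names TensorFlow sees, or None if TensorFlow is absent."""
    try:
        import tensorflow as tf
    except ImportError:
        return None
    return [device.name for device in tf.config.list_physical_devices("GPU")]


def can_load(library_name):
    """Return True if the dynamic loader can open the given shared library."""
    try:
        ctypes.CDLL(library_name)
        return True
    except OSError:
        return False


gpus = visible_gpus()
if gpus is None:
    print("TensorFlow is not installed in this environment")
elif gpus:
    print("GPUs visible to TensorFlow:", gpus)
else:
    # Library names taken from the error messages quoted in this post.
    for name in ("libcudnn.so.7", "libcublas.so.10"):
        print(name, "loadable" if can_load(name) else "NOT loadable")
```

A library reported as not loadable usually points back to a CUDA/CUDNN version that does not match the installed TensorFlow.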

Notes:

- Initially the installation of CUDA 10.1 failed on a fresh Xubuntu 18.04.5 install, with errors like:

  The following packages have unmet dependencies: cuda-10-1 : Depends: cuda-toolkit-10-1 (>= 10.1.243) but it is not going to be installed

  Trying to install cuda-toolkit-10-1 manually led to other similar errors. Using a sources.list from Xubuntu 18.04 like this one helped.

- I also once had errors like:

  Could not load dynamic library 'libcublas.so.10'; dlerror: libcublas.so.10: cannot open shared object file: No such file or directory

  After searching for this file on the filesystem, I noticed it was found in /usr/local/cuda-10.2/... whereas I never installed the 10.2 version, strange! Solution given in this post:

  sudo apt install --reinstall libcublas10=10.2.1.243-1 libcublas-dev=10.2.1.243-1

  IIRC, these 2 issues weren't present when I used Xubuntu 18.04; could the fact that I used 18.04.5 be the reason?

- Not really related, but when installing Xubuntu 20.04.2.0, memtest86, which is quite useful to test the integrity of the hardware before launching long computations, did not work.
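To hunt for a misplaced library like the libcublas case above, a small recursive search is enough. A sketch only: the default roots `/usr/local` and `/usr/lib` are an assumption about where CUDA packages put files, and `find_library` is a made-up helper name.

```python
import glob
import os


def find_library(filename_prefix, roots=("/usr/local", "/usr/lib")):
    """Return every path under the given roots whose file name starts with the prefix."""
    matches = []
    for root in roots:
        pattern = os.path.join(root, "**", filename_prefix + "*")
        matches.extend(glob.glob(pattern, recursive=True))
    return sorted(matches)


# Shows, for instance, whether libcublas ended up under an unexpected CUDA directory.
for path in find_library("libcublas.so"):
    print(path)
```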